Decision Tree (Part 2): Machine Learning Interview Prep 06

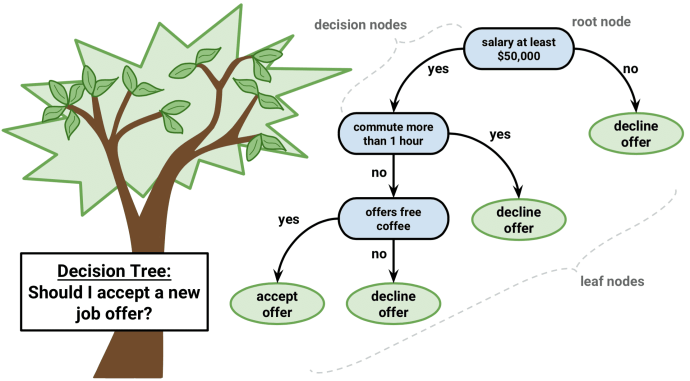

A Decision Tree is like a flowchart that helps a computer make decisions by sorting information into categories. Like a game of 20 questions, it asks a series of questions about the data to narrow down the answer. Each question splits the data into smaller groups, and the tree keeps asking questions within each group until it reaches a final answer. Overall, Decision Trees are a handy tool for making predictions by organizing data into a tree-like structure.
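To make the flowchart analogy concrete, here is a minimal Python sketch of how a finished tree answers a query and how a single question partitions a group of data. The `Node` and `Leaf` classes, the `predict` and `split` helpers, and the weather features with their thresholds are all hypothetical and hand-picked for illustration; a real decision tree learner would choose each question from the training data rather than have it written by hand.

```python
class Leaf:
    """Final answer: no further questions are asked at this node."""

    def __init__(self, label: str) -> None:
        self.label = label


class Node:
    """Asks "is sample[feature] <= threshold?" and branches on the answer."""

    def __init__(self, feature: str, threshold: float,
                 left: "Leaf | Node", right: "Leaf | Node") -> None:
        self.feature = feature
        self.threshold = threshold
        self.left = left    # subtree followed when the answer is yes
        self.right = right  # subtree followed when the answer is no


def predict(tree: "Leaf | Node", sample: dict) -> str:
    """Follow one branch per question until a node with a final answer is reached."""
    node = tree
    while isinstance(node, Node):
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.label


def split(rows: list, feature: str, threshold: float) -> tuple:
    """Partition rows into the two smaller groups produced by one question."""
    yes = [r for r in rows if r[feature] <= threshold]
    no = [r for r in rows if r[feature] > threshold]
    return yes, no


# Hypothetical hand-built tree (thresholds are illustrative, not learned from data).
tree = Node("humidity", 70.0,
            left=Leaf("play"),
            right=Node("wind_speed", 20.0,
                       left=Leaf("play"),
                       right=Leaf("stay home")))

print(predict(tree, {"humidity": 85.0, "wind_speed": 25.0}))  # prints "stay home"

rows = [{"humidity": 60.0, "wind_speed": 5.0},
        {"humidity": 85.0, "wind_speed": 25.0},
        {"humidity": 90.0, "wind_speed": 10.0}]
yes, no = split(rows, "humidity", 70.0)
print(len(yes), len(no))  # prints "1 2"
```

Each call to `split` produces the two groups for one question. A learning algorithm would apply it recursively to each group, trying candidate features and thresholds at every step and keeping the one its splitting criterion scores best.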

Let’s check your basic knowledge of Decision Trees. Here are 10 multiple-choice questions for you, and there’s no time limit. Have fun!

Question 1: What is splitting in the decision tree?

(A) Dividing a node into two or more sub-nodes based on if-else conditions

(B) Removing a sub-node from the tree

(C) Balancing the dataset prior to fitting

(D) All of the above

Question 2: What is a leaf or terminal node in the decision tree?

(A) The end of the decision tree where it cannot be split into further sub-nodes.

(B) Maximum depth

(C) A subsection of the entire tree

(D) A node that represents the entire population or sample

Question 3: What is pruning in a decision tree?

(A) Removing a sub-node from the tree

(B) Dividing a node into two or more sub-nodes based on if-else conditions

(C) Balancing the dataset prior to fitting

(D) All of the above

Question 4: In a decision tree, the measure of the probability that a randomly chosen sample would be misclassified (if it were labeled randomly according to the class distribution at the node) is called _____.

(A) Pruning

(B) Information gain

(C) Maximum depth

(D) Gini impurity

Question 5: Suppose that, in a classification problem, you are using a decision tree with the Gini index as the criterion for selecting the feature at the root node. The feature with the _____ Gini index will be selected.

(A) maximum

(B) highest

(C) least

(D) None of these

Question 6: In a decision tree algorithm, entropy helps to determine the feature or attribute that gives the maximum information about a class; this is called _____.

(A) Pruning

(B) Information gain

(C) Maximum depth

(D) Gini impurity

Question 7: In a decision tree algorithm, how can you reduce the level of entropy from the root node to the leaf node?

(A) Pruning

(B) Information gain

(C) Maximum depth

(D) Gini impurity

Question 8: What are the advantages of the decision tree?

(A) Decision trees are easy to visualize

(B) Non-linear patterns in the data can be captured easily

(C) Both

(D) None of the above

Question 9: What are the disadvantages of the decision tree?

(A) Over-fitting of the data is possible.

(B) A small variation in the input data can result in a different decision tree

(C) We have to balance the dataset before training the model

(D) All of the above

Question 10: When a decision tree predicts a class label, from which node of the tree does the algorithm start?

(A) Root

(B) Leaf

(C) Terminal

(D) Sub-node

The solutions will be published in the next quiz, Decision Tree (Part 3): Machine Learning Interview Prep 07.

The solutions to Decision Tree (Part 1): Machine Learning Interview Prep 05 are 1(A), 2(D), 3(C), 4(A), 5(A), 6(C), 7(A), 8(A), 9(B), 10(A).